JupyterHub/Kubernetes: Accessing the Spark UI via the Hub Proxy

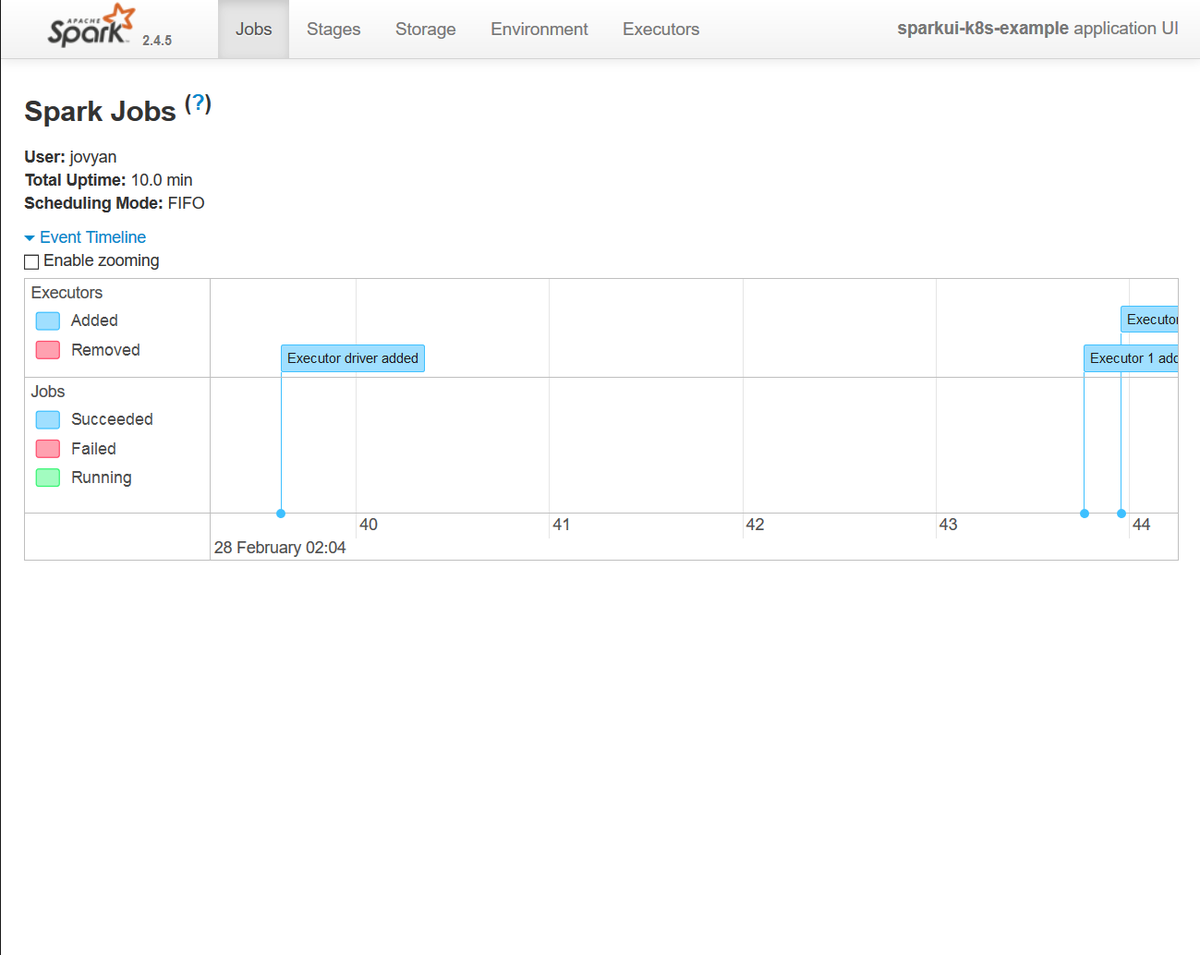

To work efficiently with spark, users need access to the Spark UI, a web page (usually running on port 4040) that displays important information about the running Spark application. It includes a list of scheduler stages and tasks, a summary of RDD sizes and memory usage, environmental information, and information about the executors and their status. Traditionally, this interface is accessed by opening http://driver-node:4040 in a web browser. If there are multiple SparkContexts running on the same machine, the web UI will bind to successive ports.

For Jupyter applications running within Kubernetes, accessing the Spark UI is difficult. With most deployments, there is a service or ingress that proxies traffic to the user's Jupyter pod, and the configuration only allows access to the main user interface with no obvious way to access the Spark UI running on port 4040.

Workaround: Utilize jupyter-server-proxy

If the Jupyter environment is managed via JupyterHub (an application that provides support for spawning notebook servers for multiple users), there is an option that can allow you access to the Spark UI (with a little help from a plugin). There is a JupyterLab extension called jupyter-server-proxy that allows you to run arbitrary external processes in the same container as your notebook, and utilize Jupyter itself to proxy traffic to a specific port via proxy endpoint. jupyter-server-proxy supports authentication, which is provided via JupyterHub and its configurable HTTP proxy.

To make this work requires two steps:

- Extend the Jupyter Docker container image to include the

jupyter-server-proxyextension frompip - Configure the Spark driver so that it is able to work with the proxy.

Extend the Docker Image

The jupyer-server-proxy package can be installed either using pip or conda (in the source listing example, we do so using pip). After install, it needs to be activated via the jupyter serverextension enable ... command.

The code listing only contains the commands needed to deploy and configure the proxy. For a complete reference showing the creation and deployment of a complete Jupyter Image for Kubernetes, please refer to Part 2, Part 3, and Part 4 of Jupyter on Kubernetes. For a pre-configured environment, refer to the Oak-Tree Big Data Docker Lab.

# Note: the following lines should be added to an existing Dockerfile # Install jupyter-server-proxy RUN pip3 install jupyter-server-proxy \ && jupyter serverextension enable --sys-prefix jupyter_server_proxy

Spark Configuration

To configure the application and Spark UI to work with the proxy, there are a number of settings which have to be provided to the runtime. These include configuration parameters passed to the SparkContext and options added to the environment. The code listing below shows an example SparkConf that provides the additional details. The example code is intended to be added to the top of a Jupyter Notebook and can be broadly re-used after modifying they options under the "General Settings" section.

Logic:

- To make the code as reusable as possible

osandsocketare used to lookup (or set) information within the environment. These include parameters such as the pod's IP address. - For the driver to start, the Spark configuration needs to have the Spark Master (Kubernetes API), namespace, executor container image, and service account. Of special import are the Kubernetes namespace and service account, the application will not be able to start unless you have configured a service account with the admin RBAC.

# Import OS and Spark Dependencies import os, posixpath, socket from pyspark import SparkConf, SparkContext # General Settings SPARK_EXECIMAGE = 'code.oak-tree.tech:5005/courseware/oak-tree/dataops-examples/spark245-k8s-minio-base' K8S_SERVICEACCOUNT = 'spark-driver' K8S_NAMESPACE = 'jupyterhub' SPARK_EXECUTORS = 2 # Set python versions explicitly using the PySpark environment variables # to prevent the executors from using the wrong version of Pytho9 os.environ['PYSPARK_PYTHON'] = 'python3' os.environ['PYSPARK_DRIVER_PYTHON'] = 'python3' # Spark Configuration conf = SparkConf() # Set Spark Master to the local Kubernetes Driver conf.setMaster('k8s://https://kubernetes.default.svc') # Configure executor image and runtime options conf.set('spark.kubernetes.container.image', SPARK_EXECIMAGE) conf.set("spark.kubernetes.authenticate.driver.serviceAccountName", K8S_SERVICEACCOUNT) conf.set("spark.kubernetes.namespace", K8S_NAMESPACE) # Spark on K8s works ONLY in client mode (driver runs on client) conf.set('spark.submit.deployMode', 'client') # Executor instances and settings conf.set('spark.executor.instances', SPARK_EXECUTORS) # Application Name conf.setAppName('sparkui-k8s-example') # Set IP of driver. This is always the user pod. The socket methods # dynamically fetch the IP address. conf.set('spark.driver.host', socket.gethostbyname(socket.gethostname())) # Initialize the SparkContext sc = SparkContext(conf=conf)

Access the Spark Web UI

After the Spark interface is running you can access the web interface via JupyterHub using a browser and a URL similar to the one below:

http://{{ jupyerhub-domain }}/user/{{ username }}/proxy/4040/jobs/

Example:

https://hub.example.com/user/roakes/proxy/4040/jobs

Note the trailing slash after jobs/. If not present, JupyterHub will not route the request correctly and you will get a 404 error.

Comments

Loading

No results found